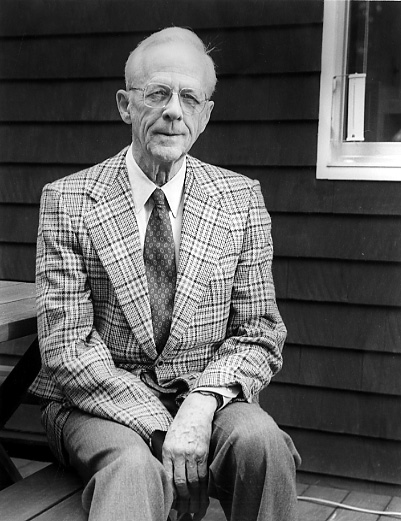

Dr. Jay Forrester

29 Kings Lane

Age 76

Interviewed December 6, 1994

Concord Oral History Program

Renee Garrelick, Interviewer.

Dr. Jay Forrester was a graduate electrical engineering student in 1944 at MIT when he was asked to design a flight training project for the Navy, which became Project Whirlwind. The work on aircraft stability and control analysis for the project converged in time with the development of digital computer technology and became the key to a national air defense system that laid the groundwork for the birth of the minicomputer industry. Project Whirlwind and Dr. Forrester's contribution resulted in the first digital computer that could be put to such practical uses as controlling manufacturing processes and directing airplane traffic.

I joined Gordon Brown along with two other people, and the four of us started the Servomechanisms Laboratory at MIT. Gordon Brown was the director, and the work was devoted to developing remote control servomechanisms for Army gun mounts and for Navy radar units. These control systems would take a very weak signal from an analog computer or director and then would position either the gun mount or radar set to a corresponding position, and so these were devices used to position heavy military equipment based on small controlling signals. That work continued through World War II. Most of the work that I did was with hydraulic oil-driven equipment, rather than electrical, because at that time the Army in particular was very suspicious of electronic equipment and did not trust it and would not use it except in radios where it was required. They much preferred that their control systems be made with mechanical hydraulic equipment.

At one stage a control unit that I had designed found its way to the Pacific. It happened this way: we had built an experimental control unit for an experimental radar that the Radiation Laboratory had built to demonstrate the possibility of directing fighter planes to incoming enemy aircraft approaching the fleet. The experimental control system had been put on the experimental radar just to demonstrate the principles involved when the captain of the U.S.S. Lexington was brought for a tour of the Radiation Laboratory, shown what they were working on, and told that in six or nine months there would be production units of that sort available. According to the story, he said, "That's too long. I want that one right there put on my ship now." Nobody had expected that these experimental units were in fact going to be used immediately. He prevailed and got the equipment on his ship, and it operated in the Pacific theater for six months or more. At that point some difficulties arose, and I volunteered to go out, see what the trouble was, and try to remedy it. Not knowing what the real problem was, I packed everything that I thought I would need, all the spare parts and tools, in a rather large foot locker that was almost solidly packed with metal and weighed about 230 lbs. I took this as baggage on a DC-3 on a 24-hour flight between Boston and San Francisco. One very amusing sidelight was that the baggage handlers saw "230 lbs." printed on it, laughed and considered it a great joke, and grabbed hold of it, and nothing at all happened, because it really was 230 lbs.

Anyway, I worked on the unit in Pearl Harbor, and since I was not entirely finished with what I was doing, they invited me to go along for what turned out to be the invasion of Tarawa and a swing down through two chains of the Marshall Islands that were still under Japanese control. Our objective was to bomb the Japanese airfields and the Japanese objective was to resist, and so there was an all-day air battle. About 11:00 that night, as the task force was leaving the area, the Japanese succeeded in hitting the Lexington with one torpedo, which cut off one of its four propellers and made a hole in the side, leaving it less than fully maneuverable. It eventually did go back to Pearl Harbor for minor repairs and then for full repairs.

In 1944 I was considering leaving MIT and perhaps going to an industrial company to work on feedback control systems when Gordon Brown showed me a list of projects that were taking shape or were available to work on. From this list I picked the one related to the development of a stability and control analyzer for aircraft that Admiral Luis de Florez had asked MIT to consider. This had originally been directed to the aeronautics department, which had come to Gordon Brown in the Servomechanisms Laboratory, and through that connection I began to work on an analog computer to solve the equations of motion of an airplane. Before this, there had been aircraft flight trainers: Link Company and Western Electric and others had made cockpits that would behave like known aircraft. The objective in this project was to take wind tunnel data from a model of an airplane and create a realistic aircraft feel, so that pilots could judge the aircraft before it was actually built. We worked on this for one year and decided that the analog computer idea was not going to be satisfactory. Through discussions with Perry Crawford, who worked at the Navy's Special Devices Center, which sponsored this program, we decided to shift over to the newly emerging field of digital computers. The advantage of the digital computer was that it avoided the most serious disadvantage of the analog machines. The analog machines were themselves imperfect mechanical and electrical devices, and if you put a large number of them together, you weren't sure whether they would be solving the problem you gave them or simply solving the consequences of their own idiosyncrasies as they interacted with one another.

A clock would not be a very good point of departure for a comparison, because it counts the swings of the pendulum and so is somewhere in between analog and digital equipment. Digital equipment was based on computing with numbers, and analog equipment on computing with the positions of mechanical shafts or the voltages of electronic circuits. Any of these electromechanical devices has a rather limited range of sensitivity; their own internal noise and uncertainties are apt to be large compared with some of the computations you want to make, so they are difficult to use in large numbers. But analog computers had preceded digital computers by a good many years. They had been the first computing devices to be used.

Robert Everett started with me around 1941 and worked with me in the Servomechanisms Laboratory when we were working on remote control devices for gun mounts and radar sets. He then continued with me as we started the project for the aircraft stability analyzer, which then became the Digital Computer Laboratory at MIT, where I was the director and he was associate director. It was in the Digital Computer Laboratory, along with discussions and inspiration from Perry Crawford in the Special Devices Center, that we began to see the opportunity to use digital computers as combat information centers to handle the complicated flow of information in a military situation. This then led us into high-speed computers that had to be very reliable, and the Whirlwind Project that took shape was characterized by its good engineering and its search for highly reliable circuits. Many of the other computers of that time (there were several other digital computer projects going on) were devoted to scientific computation, where if the machine stopped working, you could begin over or do the job tomorrow. In the kind of applications that we were anticipating, the computer was part of a real-time, ongoing situation and would have to be highly reliable.

It was an unusual group of people that worked on the project. Most of them had come, as Robert Everett and I had, as graduate students into the electrical engineering department. I think the esprit de corps came from the atmosphere of the laboratory, the extent to which major responsibilities were given to very young people who managed to rise to those challenges, and where they could immediately see themselves making a reputation and progress. They would work on a circuit in the laboratory and then go directly to the big Institute of Radio Engineers convention in New York and present a paper on what they had been doing before a national and international audience, so they saw themselves as very important leaders in a new pioneering field.

The name Whirlwind came from the Special Devices Center and possibly from Perry Crawford, whom I have mentioned. They had several computer projects of various sorts. Some of them were analog and some of them were digital, and they decided to name them after various atmospheric disturbances, so there was the Cyclone project and the Hurricane project and some others, and the Whirlwind Project. It was one of several Navy computer projects.

It was started by the Special Devices Center, which was located on Long Island and was devoted to pioneering, unusual, pace-setting military equipment. That's where the original work was sponsored. All the digital computer work and the aircraft analyzer work was done after World War II. The Special Devices Center was merged into the Office of Naval Research in Washington in 1946. Perry Crawford stayed in the picture, and he did a great deal of the fundraising and promoting of the idea of digital computers in the Navy. He worked at every level, from the Chief of Naval Operations up and down the line. He was quite uninhibited and moved around to keep people focused on the possibilities of digital computers.

Officially, the work began in late 1944 and the analog computer part of it ran through 1945, and the shift over to serial digital computers was roughly 1946. We decided that serial digital computers, which were very popular at that time because they were relatively simple, would not be fast enough. So by about 1947 we had shifted over to the parallel digital computer idea as the only way to get the speed that would be necessary for the problems that we were interested in.

In 1948 we had written two memoranda about the possibility of the digital computer handling the combat information flow in a naval task force: taking information from aircraft, surface ships, and submarines and putting it together so that the whole picture could be seen. That led to a project with the Air Force on air traffic control. For a couple of years we had a small project on applying digital computers to civilian air traffic control.

While that was going on, the Soviet Union exploded its first atomic bomb, and there began to be concern about the air defense system and the possibility of Soviet attacks with aircraft over the North Pole. That became the driving force for looking for an improved air defense system, because it was rapidly becoming evident that manual handling of information would not be satisfactory against high-speed aircraft and modern weapons. That concern was behind a project headed by George Valley, an associate professor of physics at MIT. He began to look into alternative methods, he was directed to come and see me, and together we discussed the situation. I proposed to him what we had already proposed to the Navy: that digital computers could provide the information analysis and consolidation that such an application would need. This was very radical and very daring, something that the Air Force would not dare simply to endorse and proceed with.

The upshot was the creation of what was called Project Charles, which ran for the better part of a year, to look at the shortcomings of the existing air defense system and search for alternatives. They could quickly see that the existing systems were not adequate, and about the only proposal for what to do about it came from George Valley, Bob Everett, and me, and by that time we were able to actually demonstrate it using the Whirlwind computer. The computer would bring in radar data about a bomber, automatically compute the instructions to intercept it, and send those instructions by radio to the autopilot of the fighter plane. That had all been worked out among several laboratories, so during the existence of Project Charles we demonstrated the nature of the proposed system. This was about the only proposal they had to go on, and so they ended up endorsing the idea of an air defense system with digital computers at the various centers for handling radar information and issuing defense orders.

The work up through Project Charles was done at the Digital Computer Laboratory, where Whirlwind was located, at 211 Massachusetts Avenue, the building opposite the Necco factory where the Graphics Department of MIT is presently located. That whole building was devoted to Whirlwind at the time, and Whirlwind was running there and the demonstrations were run from that location. The Project Charles study led to the creation of the Lincoln Laboratory. Buildings were built in Lexington, and in due course the Digital Computer Laboratory on the MIT campus moved and became Division 6 of the Lincoln Laboratory. I was Division 6 Head and Robert Everett was my Associate Head, and I believe it was the largest division of the Lincoln Laboratory. There were six divisions. We were the ones in charge of designing what became known as the SAGE (Semi-Automatic Ground Environment) defense system, with 30-some control centers in North America bringing in data, analyzing it, and computing defense instructions.

Ken Olsen came in as a research assistant while he was working toward his master's degree. He was a very top-notch engineer with considerable ability in the area of reliable equipment and a great deal of initiative in getting work done. Whirlwind had been a big project that we worked on for several years. To facilitate the research, we had also developed a line of what we called "test equipment." These were digital building blocks, each a different kind of digital circuit: so-called flip-flops that would remember, amplifiers, and so on. The various building blocks of a digital system had been built as separate units or separate panels so that one could plug them together in any kind of configuration to test the things that went into Whirlwind. We then came to the point where the random-access, coincident-current memory that I had invented was ready to be given a full-scale test, and we wanted to make it a very realistic test. Norman Taylor, who was chief engineer, and Kenneth Olsen, who worked for him, suggested that they would make a full-scale computer of about the capacity of Whirlwind out of this "test equipment" and use it to test the magnetic core memory. I must say I doubted that they could do it, and certainly not in the nine months they said it would take, but they in fact came very close to having the so-called Memory Test Computer built in about nine months. It was used to try out the random-access magnetic core memory, which turned out to be very successful. Within a couple of months after that, we moved the memory into the Whirlwind computer to replace the electrostatic storage tubes that we had been using, which were expensive, short-lived, and not very reliable.

The ideas that became the magnetic core memory evolved over a period of two or three years. At the time, people were desperate for computer memory, and all kinds of things were being tried. As an interesting sidelight, we seriously considered renting a television link from Boston to Buffalo and back so that we could store binary digits in the transit time of the round trip on the television channel. That illustrates how desperate people were to explore every possibility in electronic memory. Among the existing memory systems, there were what I would call the linear ones, the single-line memories such as the mercury delay line. The mercury delay line, which was the one actually developed, used, and found reliable, used a tube of mercury about a meter long. You would have a piezoelectric crystal at one end, where you would put shock waves into the mercury, and another crystal at the other end, where you would pick them up; the transit time through the tube was about a millisecond, a thousandth of a second. You could have maybe something like a thousand of these shocks, each either present or absent, traveling down the tube, and each was picked up at the far end, retimed, reshaped, and put back in at the beginning. You could keep this whole chain of a thousand binary digits circulating in the delay line, and that worked. But it was slow in the sense that you could not get at something stored in the delay line until it came out the far end. A millisecond represented the access time, which is very slow as computer circuits go.
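To make the access-time point concrete, here is a toy model of a recirculating delay-line store in Python. It is a sketch under stated assumptions: the class name `DelayLine`, the 1,000-bit capacity, and the 1 ms transit time are illustrative values taken loosely from the description above, not the specification of any real machine. What it shows is that a bit can be read or written only when it emerges at the far end, so the wait depends on where that bit currently is in the circulation.

```python
from collections import deque


class DelayLine:
    """Toy recirculating delay-line store (hypothetical parameters).

    Bits travel past the output in a fixed order; only the bit now
    emerging at the far end can be read or written, after which it is
    reshaped and put back in at the beginning.
    """

    def __init__(self, n_bits=1000, transit_time_s=1e-3):
        self.slots = deque([0] * n_bits)          # slots[0] is the bit at the output
        self.slot_time = transit_time_s / n_bits  # time for one bit to emerge
        self.position = 0                         # address of the bit at the output

    def _tick(self):
        # Pick up the bit at the far end and re-insert it at the beginning.
        self.slots.rotate(-1)
        self.position = (self.position + 1) % len(self.slots)

    def _wait_for(self, address):
        """Circulate until `address` reaches the output; return the time spent."""
        steps = (address - self.position) % len(self.slots)
        for _ in range(steps):
            self._tick()
        return steps * self.slot_time

    def read(self, address):
        elapsed = self._wait_for(address)
        return self.slots[0], elapsed

    def write(self, address, bit):
        elapsed = self._wait_for(address)
        self.slots[0] = bit
        return elapsed
```

With these parameters, writing address 999 just after address 0 has gone by costs 999 bit-times, nearly the full millisecond, while re-reading the bit just written costs nothing. Averaged over uniformly random addresses, the wait in this model is about half the transit time.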

Then there were the two-dimensional storage units, mostly cathode-ray tubes of varying designs, where you would store dots on the inside of the tube that could be charged plus or minus and then scanned and picked up again. In general, those were rather unreliable and had a short life, but they were used. I began to think that if we had one-dimensional storage and two-dimensional storage, what about the possibility of three-dimensional storage? Thinking about that in about 1947, I arrived at a logical structure that satisfied the idea, but it was built around devices that were themselves not really practical. The devices were essentially little neon tubes, glow tubes, which have the high degree of nonlinearity that a memory system must have: you have to apply a rather large voltage to make them glow, but they will continue to glow as you reduce the voltage to a relatively low level. So you have a device that you can cause to fire, and then you can reduce the voltage and it will continue to fire. This lent itself to a coincident-current kind of arrangement, where you could activate a wire in the "x" axis and another one crossing it in the "y" axis, and only the glow tube at the intersection would have enough voltage on it to break down and begin to discharge. Logically, this was the basic idea. Practically, it again would be slow, but more importantly, the characteristics of glow discharges change with temperature and age. We did tests on individual units, but on the whole it didn't seem to be going anywhere, so we never really tried to build a full system and didn't pursue it. Then, about two years later, in 1949, I was looking at advertisements for magnetic materials that the Germans had used for magnetic amplifiers in their army tank turrets, material having what is called a rectangular hysteresis loop, and I began to ponder whether that could be incorporated into the logical structure that I had worked with before. Over a period of two or three months we worked out how that could be done, and then over the next three years or so we brought it to the point where it was a working, permanently reliable system. It was used from the mid-'50s to the mid-'80s, about 25 years, in essentially all digital computers until it was replaced by the integrated circuits that are used today.
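The coincident-current principle can be sketched in a few lines of Python. This is a simplified, hypothetical model, not Whirlwind's circuitry: each core is treated as an ideal rectangular-loop element that switches only when the combined drive reaches a full-select current, and each x and y wire carries half of that, so only the core at the intersection switches. The names `CorePlane`, `FULL_SELECT`, and `HALF_SELECT` are illustrative, and the destructive read followed by a rewrite reflects how core memories were commonly operated; it is included here as an assumption of the sketch.

```python
# Illustrative drive levels in arbitrary units (assumptions, not Whirlwind figures).
FULL_SELECT = 1.0           # drive needed to switch a core
HALF_SELECT = FULL_SELECT / 2  # what a single x or y wire carries


class CorePlane:
    """Square grid of idealized rectangular-loop cores, indexed state[x][y]."""

    def __init__(self, size):
        self.size = size
        self.state = [[0] * size for _ in range(size)]

    def _pulse(self, x, y, direction):
        """Send half-select current down wire x and wire y.

        direction=+1 drives toward 1, -1 toward 0. Returns True if any core
        switched, which is what a sense wire threading every core would see.
        """
        target = 1 if direction > 0 else 0
        switched = False
        for i in range(self.size):
            for j in range(self.size):
                drive = (HALF_SELECT if i == x else 0.0) + (HALF_SELECT if j == y else 0.0)
                # Rectangular loop: half drive leaves a core alone; full drive switches it.
                if drive >= FULL_SELECT and self.state[i][j] != target:
                    self.state[i][j] = target
                    switched = True
        return switched

    def write(self, x, y, bit):
        self._pulse(x, y, +1 if bit else -1)

    def read(self, x, y):
        # Drive the selected core toward 0; a switch on the sense wire means it held 1.
        bit = 1 if self._pulse(x, y, -1) else 0
        if bit:
            self.write(x, y, 1)  # the read erased the core, so restore it
        return bit
```

Writing a 1 at one intersection leaves every half-selected core on the same row and column untouched, which is the whole point of the coincident-current arrangement: a plane of n by n cores needs only 2n selection wires.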

In Whirlwind there were more ideas that have continued to the present time than in any other computer of that period. It had the magnetic core memory, which dominated the field for perhaps two decades. It was a high-speed parallel machine, as today's computers are, at a time when a lot of machines were serial. It used cathode-ray tubes driven by the computer, with ways for a person to interact with what was shown on the screen. We didn't use the mouse that people use today; we used a light gun that you would hold over the face of the tube where you wanted something to happen, and it would pick up the light from the tube. It served the purpose that a mouse now serves in interacting with the computer. There were a number of other things that I would say made it a pioneer for a lot of what is going on now. It was of very high reliability; the emphasis throughout had been on reliability.

The SAGE air defense centers had perhaps 60,000 to 80,000 vacuum tubes each, in a building four stories high and maybe 160 feet square. Those systems operated from the mid to late 1950s until the early 1980s. The historical data on the performance of those centers show that a center was operational about 99.8% of the time. That's better than today's computers will generally do. Partly it was because there were two computers in each center, so you could switch between them, but it was largely due to two things. First, we had raised the life of the vacuum tube, in one design step, from 500 hours to about 500,000 hours, something like a thousandfold increase, by finding out why tubes were failing and removing the cause. Second, we had added another factor of ten or more in reliability with a marginal checking system that would let you find any electronic component that was drifting toward the point of causing an error before it did cause an error. So one could always be monitoring the entire system for anything that might be drifting or changing its characteristics.
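The idea behind marginal checking can be illustrated with a toy model. The sketch below assumes, as a simplification, that each component works only within a window of supply voltage that narrows as it ages, and that a check shifts the supply by a fixed margin while the system is tested. The `Component` class, the 150 V nominal supply, and the 10% margin are hypothetical values chosen for illustration, not SAGE or Whirlwind parameters. A component that still works at nominal voltage but fails at the shifted voltage is flagged as drifting before it causes an error in normal operation.

```python
from dataclasses import dataclass

NOMINAL_V = 150.0    # hypothetical nominal supply voltage
TEST_MARGIN = 0.10   # hypothetical: shift the supply by +/-10% during a check


@dataclass
class Component:
    name: str
    low_v: float   # lowest supply voltage at which it still works
    high_v: float  # highest supply voltage at which it still works

    def works_at(self, volts: float) -> bool:
        return self.low_v <= volts <= self.high_v


def marginal_check(components):
    """Return (failing_now, drifting).

    failing_now: components that already fail at nominal voltage.
    drifting: components that pass at nominal voltage but fail when the
    supply is shifted by the test margin, i.e. they have lost margin.
    """
    low = NOMINAL_V * (1 - TEST_MARGIN)
    high = NOMINAL_V * (1 + TEST_MARGIN)
    failing_now, drifting = [], []
    for c in components:
        if not c.works_at(NOMINAL_V):
            failing_now.append(c.name)
        elif not (c.works_at(low) and c.works_at(high)):
            drifting.append(c.name)
    return failing_now, drifting


if __name__ == "__main__":
    parts = [
        Component("tube A", 100.0, 200.0),  # healthy, wide margin
        Component("tube B", 140.0, 200.0),  # works now, but margin has shrunk
        Component("tube C", 160.0, 200.0),  # already failing at nominal
    ]
    print(marginal_check(parts))
```

The design choice the model captures is that a check under deliberately degraded conditions turns a future, intermittent failure into a present, reproducible one, so a weak part can be replaced during maintenance instead of failing during operation.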

There were two major Air Force projects at one stage in the early 1950s. One, which was presumed to be a quick fix, was to depend upon existing, largely analog computers and was at the Willow Run Laboratory of the University of Michigan. The people there were proposing that, for a relatively small sum, $300,000,000, they could both design and install a much improved air defense system. We at the Lincoln Laboratory believed that they were grossly underestimating both the cost and how long it would take them to do it, but we took the position that if people of good reputation thought it could be done, the Air Force should continue to support it, but should also support the work at the Lincoln Laboratory, which was on a sounder basis and would take longer but be better. Within two or three years, it was evident to anyone walking through the two places that the long-term project was ahead of the short-term project at Michigan, and eventually the Air Force canceled the Michigan project and diverted what would have been production money for Michigan to finish the research and development at Lincoln Laboratory. Of course, the air defense system cost a great deal more than had originally been estimated.

As we developed a computer design for the air defense system, we would of course need a manufacturer to actually produce the equipment. As that time drew near, we sent out requests to a substantial number of companies, probably 15 or so, seeking any expression of interest in being considered. Perhaps five companies responded that they did want to be considered. A team consisting of me and several of my associates visited these companies in some depth, on very penetrating visits, to see which looked most able to carry off what was an entirely new kind of project. There was no precedent. There wasn't anybody building digital computers at that time. As a result of that survey, it was clear that IBM was far ahead of any of the others as a possible company to do the work, so we recommended them to the Air Force. We subcontracted with them to work with us on designing and building the first prototype. The Air Force then contracted with them to build the computers that went into the air defense system.

By 1956, the direction of the SAGE air defense system was essentially set. The first of the 30-some computer centers was nearing completion in New Jersey. The designs had been frozen. The organization to carry out the installations had been set. The computer programming for the air defense system, which was started at the Lincoln Laboratory, was turned over to the Rand Corporation, which created a new corporation, the System Development Corporation, to do the computer programming for the system. There were now many organizations and large numbers of people working on it. There was an opportunity for things to begin to change for me. I felt at that time that the pioneering days of computers were over. A lot of people today might find that surprising. In fact, I think that in the decade from 1946 to 1956 computers advanced more than in any decade since, although every decade has had tremendous advances.

I decided that I would do something different. Out of a happenstance discussion with James Killian, who was then President of MIT, he suggested that I should consider the new management school that MIT was starting. It seemed appropriate to do something else. Robert Everett then became Head of Division 6 of Lincoln Laboratory. In time Division 6 was separated from the Lincoln Laboratory and became the MITRE Corporation, and after one or two changes Everett became President of the MITRE Corporation and stayed there until his retirement.

I went to the MIT Management School, partly to try to fulfill the vision of Alfred Sloan. Sloan had given ten million dollars to MIT to start a management school. Sloan had a feeling that a management school in a technical environment would develop differently from one in a liberal arts environment like Harvard, Chicago, or Columbia, maybe better, but in any case different, and that it would be worth ten million dollars to run the experiment and see what would happen. The school had officially been started in 1952. It had existed in name for four years before I joined it, but nothing had yet been done to work out what it meant in the MIT setting. It was getting organized to teach rather typical management subjects. I think others believed, and perhaps I did too, that I would work either on the question of how business should use the newly emerging digital computers or in the field of operations research, which had already been defined. It existed then pretty much as it does now. It would be one or the other of these.

I spent my first year at the management school with nothing to do except try to decide why I was there, and during that time I looked at both of those prior expectations. Computers in business use seemed to have a lot of momentum. The manufacturers were very much in the business. Banks and insurance companies were using computers. It didn't seem that a few of us would have an impact on the way that field was going. The field of operations research was interesting, useful, and probably worthwhile, but it clearly was not dealing with the big issues of what made the difference between corporate success and corporate failure. Out of that, and out of discussions with various people in industry, I think what happened was that my background in servomechanisms, feedback systems, computers, and computer simulation came together to lead into what is now known as the field of system dynamics.

System dynamics deals with how the policies and structural relationships of a social, socioeconomic, or sociotechnical system produce the behavior of that system. The field started as one devoted to corporate policy, to how corporate policy produces corporate growth and corporate stability. In 1969 it began to shift to larger social systems when John Collins, former mayor of Boston, and I worked together to apply system dynamics to the growth and stagnation of cities. That work led in two directions. One was the use of system dynamics for studying the behavior of economic systems, which I'm still involved in. The other led to the work we did with the Club of Rome, which led to my book World Dynamics and to the book The Limits to Growth, which dealt with how the growth of population, industrialization, and pollution was coming up against the carrying capacity of the world and leading all of civilization into serious pressures and consequences. More recently, I have been much involved in making system dynamics a foundation for kindergarten through 12th grade education, not as a subject in its own right but as a basis for every subject. So it is being used in mathematics, physics, biology, environmental issues, economics, and even literature. I see that whole area of the public coming to understand our social and economic systems as really the frontier for the next 50 years. It is the new frontier, as science and technology have been the frontier for the last 150 years. The frontier for the next 50 to 100 years will be the understanding of our social, economic, population, and political systems.

For a number of years the Whirlwind computer operated in the Barta Building at MIT. Then, after commercial computers became available and were fairly widely used, and there were a number of them at MIT, it became too expensive and unnecessary to maintain Whirlwind, so it was put in storage. Then, much to my surprise, Bill Wolf, who had a company here in West Concord and who had always liked the machine, decided he would put it back into operation. I would not have expected that one could successfully reassemble it, but he did. He built a building here in Concord, got permission from the Navy to take the machine out of storage, put it back together, and did use it for a period of time. He had a research company, and he sold time on the computer. Then it got to the point where again he couldn't keep it up, and it was on the road to being junked. But Kenneth Olsen discovered that it was about to be junked, sent trucks to rescue it, and took it back to Digital, where I think parts of it were on display for a period of time. What is now the Computer Museum in Boston started at Digital Equipment Corporation. There are parts of Whirlwind there at the museum. Other parts went to the Smithsonian Institution in Washington, where, the last time I knew about it, there was a display on the first floor.

It was interesting and rewarding to be on the edge of technology in that post-World War II period. I think we were aware of the ground we were breaking at that time. In 1948 we were asked by Karl Compton, President of MIT, who was also head of the Research and Development Board for the military establishment, to give him an estimate of what the role of computers would be in the military. This was before any reliable, high-speed, general-purpose computer had yet functioned. There were unreliable computers, and ones that weren't general purpose, and ones that were slow, but there was nothing comparable to what Whirlwind was to become in all of those dimensions. Whirlwind had not yet run successfully. So we wrote a report for him on what we foresaw as the future of computers in the military for the next 15 years, from 1948 to 1963. That report, which is still available, ended with a large page, maybe 2 by 3 feet, with the 15 years across the top and a dozen military applications down the side, and at every intersection we filled in our estimate of what the status of the field would be, what would be spent in that year for development, and what would be spent in that year for production. At that time, as I say, there were only a few experimental efforts in the field. That culminated in the lower right-hand corner with a total of something over a billion dollars for, I think, just the research and development part. We went into a meeting with the Office of Naval Research where they thought the agenda was whether we would be permitted to have another hundred thousand dollars, and we suggested they would be spending two billion. So, shall we say, there was a communication gap in that meeting. However, I think that forecast was closer to being right in percentage terms than what most corporations forecast today for how long it will take them to design their next computer.